Foundation Models

Foundation Models are a new paradigm in Artificial Intelligence that represents a shift from traditional task-specific models to more general, adaptable ones. They are large-scale Machine Learning models trained on broad, diverse datasets, which lets them learn general-purpose representations across many domains and modalities. These models rely on techniques such as transfer learning, self-supervised learning, and Transformer architectures to develop capabilities that can be fine-tuned and adapted for a wide range of downstream tasks and applications.

Foundation models are characterized by their scale, both in terms of the massive datasets they are trained on and the computational resources required. They are designed to capture complex patterns, relationships, and representations across different data types, such as text, images, audio, and video. This versatility enables them to be applied to various domains, including natural language processing, computer vision, speech recognition, and multimodal tasks.

The development of foundation models has been driven by advancements in hardware, particularly GPUs, and the availability of large-scale datasets. Models like GPT-3, GPT-4, DALL-E, and PaLM are prominent examples of foundation models that have demonstrated remarkable capabilities in generating human-like text, creating realistic images, and performing a wide range of tasks. These models can be fine-tuned or adapted for specific applications, allowing organizations to leverage their broad knowledge and capabilities while tailoring them to their unique requirements.
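As a rough illustration of the adaptation step described above, the sketch below keeps a "pretrained" feature extractor frozen and trains only a small task-specific head on top of it. Everything here is an assumption made for illustration: `pretrained_features`, `train_head`, `predict`, and the toy dataset are hypothetical stand-ins, not the API of any real foundation model. In practice, fine-tuning may also update some or all of the underlying model's weights.

```python
import math

def pretrained_features(x):
    """Hypothetical stand-in for a frozen foundation-model encoder.

    Maps a raw 2-D input to a fixed feature vector. Nothing in this
    function is updated during training.
    """
    a, b = x
    return [a, b, a * b, 1.0]  # the final 1.0 acts as a bias feature

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, labels, epochs=50, lr=0.5):
    """Train only a logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(x) for x in data]
    w = [0.0] * len(feats[0])
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)))
            # Gradient step on the log-loss, applied to the head weights only.
            for i in range(len(w)):
                w[i] -= lr * (p - y) * f[i]
    return w

def predict(w, x):
    f = pretrained_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f))) >= 0.5 else 0

# Toy downstream task (assumed for illustration): label is 1 when both
# inputs share the same sign, which a linear model on raw inputs cannot fit.
DATA = [(1.0, 1.0), (-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0)]
LABELS = [1, 1, 0, 0]

if __name__ == "__main__":
    head = train_head(DATA, LABELS)
    print([predict(head, x) for x in DATA])
```

The point of the design is that the expensive part (the encoder) is reused as-is, while the small head is the only component fitted to the new task's data.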

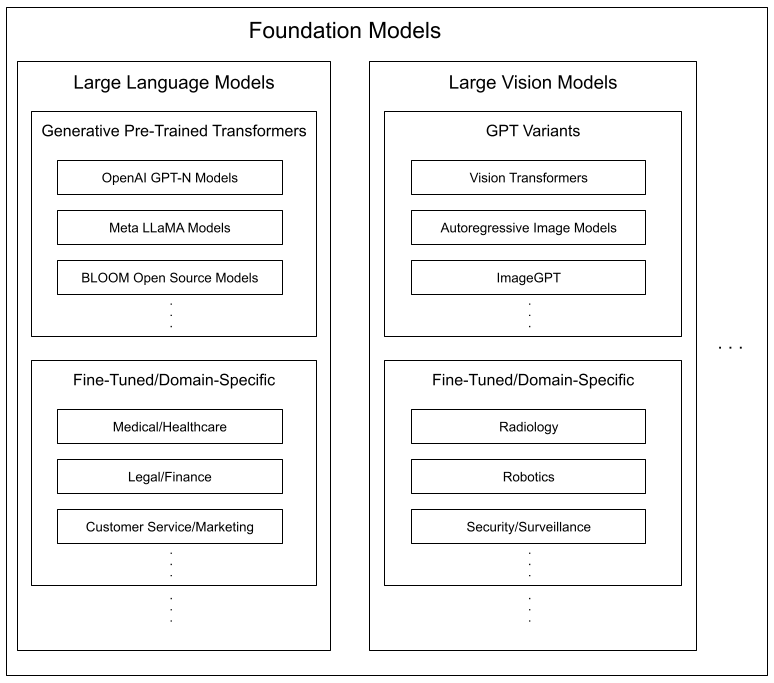

Subsets of Foundation Models

Foundation Models can be divided into many subsets, as illustrated below: